Hello,

I’d like to optimize very specific scenarios where almost every directory is enumerated on an NTFS volume (on an HDD). When an application spends a considerable time reading a file with inefficient access patterns, a cheap trick can be utilized to speed it up: reading the whole file sequentially in big chunks beforehand into memory, so it gets cached by the system. Then the application’s reads will be fulfilled from memory by the cache manager, so it’s going to be really fast.
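For reference, the file-level trick described above can be sketched as follows. This is only a minimal, portable illustration: the function name `WarmFileCache` and the 4 MiB default chunk size are assumptions made for the example, not something taken from an existing tool. It relies on ordinary buffered reads going through the system file cache; on Windows one would probably open the file with `CreateFile` and `FILE_FLAG_SEQUENTIAL_SCAN` instead of using `std::ifstream`.

```cpp
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical helper: reads `path` front to back in `chunkSize`-byte blocks
// and throws the data away. The only purpose is the side effect that the OS
// file cache ends up holding the file's contents.
// Returns the number of bytes read (0 if the file cannot be opened).
std::size_t WarmFileCache(const std::string& path,
                          std::size_t chunkSize = 4 * 1024 * 1024) {
    if (chunkSize == 0) {
        return 0;  // a zero-sized read would never reach end of file
    }

    std::ifstream in(path, std::ios::binary);
    if (!in) {
        return 0;
    }

    std::vector<char> buffer(chunkSize);
    std::size_t total = 0;

    // A short (or empty) final read sets eofbit/failbit and ends the loop;
    // gcount() still reports how many bytes that last read delivered.
    while (in) {
        in.read(buffer.data(), static_cast<std::streamsize>(buffer.size()));
        total += static_cast<std::size_t>(in.gcount());
    }
    return total;
}
```

Calling `WarmFileCache("some_large_file.bin")` before starting the application with the inefficient access pattern is the whole trick; the return value is only useful as a sanity check that the file was actually read.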

I thought I would employ the same “trick” for this problem as well, this time on the MFT. However, it doesn’t seem that the MFT can be accessed easily. All the code I could find on the internet opens a handle to the volume itself and performs raw reads on it. This works in the sense that you can actually read the MFT or anything else on the drive, but it seems that the cache manager performs no caching for these reads. I speculate this is because the volume is not opened as a regular file.

Is there a way to either open the MFT as a regular file or somehow perform reads on it in big chunks in a way that will populate the file cache with its content?

I’m also interested in this. I wanted to read the MFT attributes, but there doesn’t seem to be a quick way to do it. As far as I know, you have to use FSCTL_GET_NTFS_VOLUME_DATA and then parse the sectors individually.

NTFS effectively treats the MFT as a regular file and uses the Cache Manager to cache its contents. The file is available to user mode as “C:\$Mft”, but NTFS restricts you to only getting FILE_READ_ATTRIBUTES access.

When you read directly from the volume you bypass all of this caching because Windows caches on a file level. So, no, there is no supported way to do what you’re asking.

Thanks Scott, I’m sorry to hear that.

> there is no supported way to do what you’re asking.

Is there an unsupported way, though? This is not something that we would like to ship in an end-user product or something, so if there’s a way that’s prone to breaking, that would still be acceptable.

If the answer is still no, would this be possible from a software driver? If so, could you give me some pointers?

The quasi-documented FSCTL_FILE_PREFETCH lets you prefetch the metadata for a file or the contents of a directory. This really requires that you know in advance what it is you want to prefetch though (easy for the prefetcher because it’s just speeding up the next time).

If you wanted a very large hammer, presumably you could try loading a driver and calling MmPrefetchPages yourself on the MFT. I haven’t ever tried this myself, but it seems reasonably safe in that you’re not accessing the MFT in a way that might conflict with NTFS’ usage. The downside is that it only puts the pages into the standby state (instead of active), so for a sufficiently large MFT the start of the MFT could be paged out by the time you reach the end.

Note that just having the MFT resident isn’t necessarily going to speed up your directory scans as any sufficiently large directory will not be resident in the MFT.

As for FSCTL_FILE_PREFETCH, I think I’m better off using NtQueryDirectoryFile directly with a big enough buffer size (FindFirstFile is stubborn and uses a buffer size of 0x1000 even if I pass FIND_FIRST_EX_LARGE_FETCH, and only uses a bigger, fixed buffer of 0x10000 for subsequent FindNextFile calls). This speeds up the enumeration of larger directories, but the gains are not that significant.

As for MmPrefetchPages, I see that the READ_LIST structure (second parameter) should contain pointer(s) to FILE_OBJECT(s). Could you give me some guidance on how to acquire a FILE_OBJECT (ZwOpenFile etc. give me a regular HANDLE)? Even if there is a way to get one, won’t the system prevent my driver from getting one for the MFT specifically (as it does in user mode)?

@Donpedro said:

> As for FSCTL_FILE_PREFETCH, I think I’m better off using NtQueryDirectoryFile directly with a big enough buffer size (FindFirstFile is stubborn and uses a buffer size of 0x1000 even if I pass FIND_FIRST_EX_LARGE_FETCH, and only uses a bigger, fixed buffer of 0x10000 for subsequent FindNextFile calls). This speeds up the enumeration of larger directories, but the gains are not that significant.

The point of the prefetch is that it brings the file/directory into memory using an efficient I/O pattern. Then when someone actually enumerates the directory it’s resident in memory and you avoid the page faults. You need to eat the disk I/O either way though (either up front or on demand).

> As for MmPrefetchPages, I see that the READ_LIST structure (second parameter) should contain pointer(s) to FILE_OBJECT(s). Could you give me some guidance on how to acquire a FILE_OBJECT (ZwOpenFile etc. give me a regular HANDLE)? Even if there is a way to get one, won’t the system prevent my driver from getting one for the MFT specifically (as it does in user mode)?

ZwOpenFile + ObReferenceObjectByHandle. MmPrefetchPages does not require any specific access to the FILE_OBJECT so the fact that you can’t get FILE_READ_DATA doesn’t matter.

I finally got around to creating a prototype (excuse the questionable code quality, it’s just a prototype after all): https://gist.github.com/Donpedro13/ec077d54a4c8d1ffbaea77fa781a8e73

I tested it on a VirtualBox Win 10 machine with sufficient resources assigned (16 GB of RAM, etc.). There are several problems:

- When invoked on C:\$Mft (a roughly 100 MB file), MmPrefetchPages instantly returns STATUS_SUCCESS. However, there is no disk read activity afterwards (monitored manually for a few minutes), and the Standby column for “Metafile” does not show any growth in RAMMap.

- When invoked on a small test file (a few bytes in size), an access violation is encountered in MiPfPrepareReadList, called by MmPrefetchPages internally. I turned on Driver Verifier (including the special pool), but it found no errors. Maybe I’m initializing the READ_LIST structure incorrectly? I’ve drawn out everything on a piece of paper and the “algorithm” seems correct, but I may be misinterpreting some fields or something (the documentation on READ_LIST is scarce)

I didn’t try to run it but the general idea looks right. The MFT might already be paged in…Try running RAMMap and emptying all the various working sets before running. Do you see I/Os then?

Interesting about the crash. I don’t think I’ve ever tried to call it on a file that small, it’s possible it just doesn’t work because prefetching doesn’t make much sense there.

Looks like I spoke a bit too early… The driver crashes regardless of file size (so not only for small ones). Here’s the call stack:

    nt!DbgBreakPointWithStatus
    nt!KiBugCheckDebugBreak+0x12
    nt!KeBugCheck2+0x946
    nt!KeBugCheckEx+0x107
    nt!KiBugCheckDispatch+0x69
    nt!KiSystemServiceHandler+0x7c
    nt!RtlpExecuteHandlerForException+0xf
    nt!RtlDispatchException+0x297
    nt!KiDispatchException+0x186
    nt!KiExceptionDispatch+0x12c
    nt!KiPageFault+0x443
    nt!MiPfPrepareReadList+0x60 <===============================
    nt!MmPrefetchPagesEx+0x96
    nt!MmPrefetchPages+0xc
    MFTPrefetch!`anonymous namespace'::PrefetchPages+0x103
    MFTPrefetch!DeviceControl+0x1dc
    nt!IofCallDriver+0x55
    nt!IopSynchronousServiceTail+0x1a8
    nt!IopXxxControlFile+0x5e5
    nt!NtDeviceIoControlFile+0x56
    nt!KiSystemServiceCopyEnd+0x25

When invoked on the MFT, it does not crash, and the code still goes through nt!MiPfPrepareReadList. I’ve single-stepped the assembly for a bit, and it doesn’t look like some early return branch is taken. Since this case does not crash and seemingly has no effect, I would have expected that nt!MiPfPrepareReadList is either not entered at all or returned from early.

I played with your code and two things:

- The crash happens if there isn’t already a section created for the file you’re trying to prefetch. You can fix it by getting a section handle to the file (ZwCreateSection) before calling MmPrefetchPages

- Using ProcMon I was able to see MmPrefetchPages generate paging reads to the MFT. However, NTFS immediately failed them with STATUS_INVALID_DEVICE_REQUEST. Stepping around in the debugger it looks like NTFS will only allow reads to the MFT if they are generated in the context of another I/O operation (e.g. while handling an IRP_MJ_CREATE a paging read of the MFT happens). Other than that any and all reads to the MFT are immediately rejected. I had assumed that NTFS would let paging reads to the MFT in for all cases but I was incorrect.

So, as written, NTFS will not let something external prefetch the MFT. Argh. I’d never called it on the MFT so my bad.

I can think of some horrible ways to work around this but I wouldn’t really want to do it anywhere but in a lab. If you just want to see if the performance boost is there you could always use the debugger to patch out the offending check in NtfsCommonRead.

Thanks for taking the time to investigate further, Scott.

For 1.), I opened an issue on GitHub; I think this “little detail” should definitely be mentioned in the docs.

For 2.), what was your filter in Process Monitor? I’ve tried searching both for “MFT” in the operation path and for STATUS_INVALID_DEVICE_REQUEST in “result”, but got zero results.

> If you just want to see if the performance boost is there you could always use the debugger to patch out the offending check in NtfsCommonRead.

After investing a non-trivial amount of time in this whole topic, I’m more than interested in at least seeing the potential performance benefits. Could you give me some pointers regarding this? I’ve tracked down where STATUS_INVALID_DEVICE_REQUEST comes from using “status debugging”, but looking at the disassembly, it looks like this branch is taken from several locations inside NtfsCommonRead. I can reverse simple functions; this one, however, is both complex and in a domain that I’m not that familiar with (NT internals).

Thanks!

My Process Monitor filter was “contains $Mft”. Two things:

- Make sure you’ve enabled Advanced Output. The reads will come from the System process and that’s filtered out in basic mode

- Before running the test empty all possible working sets using RAMMap. This guarantees that things aren’t paged in

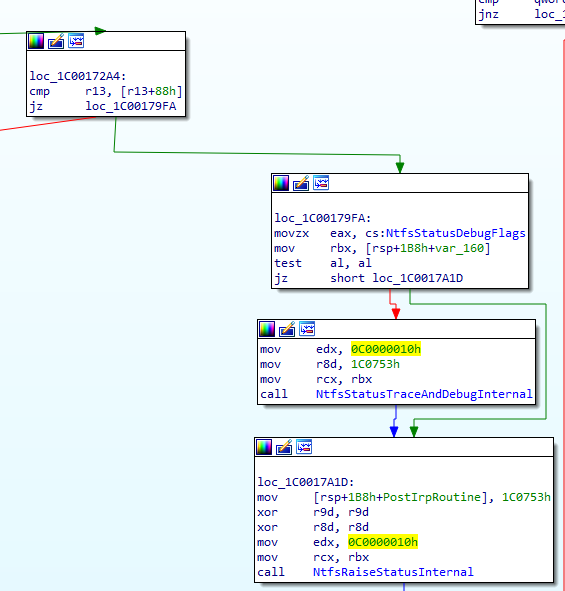

Which version of Windows are you looking at? In Win10 1909 I only see one path that gets you STATUS_INVALID_DEVICE_REQUEST:

That cmp instruction is what is getting in the way. It seems pretty consistent on other versions (2004 has a similar check, just a different register).