- The GenerateSine() function's buffer (ToneGenerator.cpp) is being sent to the user application, and

The generated sine wave is seen by whatever application is consuming the fake microphone, such as Audacity. That application does not know that the stream is not coming from a real mic.
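To make concrete what the fake microphone delivers: the tone path fills each capture buffer with PCM samples of a sine wave, and no real microphone is involved at any point. The following is a minimal portable C++ sketch of that idea, not the sample's actual ToneGenerator code. The class name `SineSource`, the 16-bit mono format, and the parameters are assumptions chosen for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for a tone generator that produces 16-bit mono PCM.
// It reads no input device; the "microphone" data is purely synthesized.
class SineSource {
public:
    SineSource(double frequencyHz, double sampleRateHz, double amplitude)
        : step_(2.0 * kPi * frequencyHz / sampleRateHz), amplitude_(amplitude) {
        assert(sampleRateHz > 0.0);
        assert(amplitude >= 0.0 && amplitude <= 1.0);
    }

    // Fill 'count' samples. The phase persists between calls, so successive
    // buffers join into one continuous waveform without clicks.
    void Generate(int16_t* out, size_t count) {
        for (size_t i = 0; i < count; ++i) {
            out[i] = static_cast<int16_t>(
                std::lround(amplitude_ * 32767.0 * std::sin(phase_)));
            phase_ += step_;
            if (phase_ >= 2.0 * kPi) phase_ -= 2.0 * kPi;  // keep phase bounded
        }
    }

private:
    static constexpr double kPi = 3.14159265358979323846;
    double step_;
    double amplitude_;
    double phase_ = 0.0;
};
```

For example, a 1 kHz tone at 48 kHz repeats every 48 samples, so two consecutive 48-sample buffers contain the same waveform.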

- Whatever we speak into the live mic is being stored (SaveData.cpp) in files in a local directory.

This is absolutely not true. The sysvad fake speaker records whatever is being sent to it from an audio application. There is no connection at all, at the driver level, between the microphone and the speaker part of an audio driver, and there is certainly no connection between the microphone from your real hardware and the speaker part of sysvad. They are all totally independent streams.

Now, if you happen to have an audio application, like Audacity, that is reading from a live microphone and copying that data stream out to the sysvad fake speaker, then the recording will be the live mic data. That’s thanks to Audacity, not to the driver. If you had your MP3 player playing into the sysvad speakers, then sysvad would record the music.
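The independence of the render side can be sketched in a few lines. The following C++ model assumes the fake speaker is a cyclic buffer that the audio engine writes and the driver drains into a recording sink, which is the role SaveData.cpp plays in the sample. The class `RenderStream` and its methods are hypothetical. Note that nothing in it refers to any capture stream: the recording contains exactly what some application played, whether that is relayed mic audio or an MP3.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical model of a render (fake speaker) stream. The audio engine
// writes playback data into a cyclic buffer; the driver periodically drains
// newly written bytes into a recording sink. No microphone is referenced.
class RenderStream {
public:
    explicit RenderStream(size_t bufferBytes) : buffer_(bufferBytes, 0) {
        assert(bufferBytes > 0);
    }

    // Stands in for the audio engine writing whatever an application played.
    void EngineWrite(const uint8_t* data, size_t count) {
        for (size_t i = 0; i < count; ++i) {
            buffer_[writePos_] = data[i];
            writePos_ = (writePos_ + 1) % buffer_.size();  // wrap at the end
        }
    }

    // Stands in for the driver's read side: copy 'count' bytes from the
    // driver's own read position into the sink, wrapping as needed.
    void Drain(size_t count, std::vector<uint8_t>& sink) {
        assert(count <= buffer_.size());
        for (size_t i = 0; i < count; ++i) {
            sink.push_back(buffer_[readPos_]);
            readPos_ = (readPos_ + 1) % buffer_.size();
        }
    }

private:
    std::vector<uint8_t> buffer_;
    size_t writePos_ = 0;  // audio engine's position
    size_t readPos_ = 0;   // driver's position
};
```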

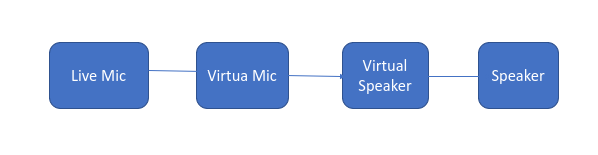

So, I called this process feeding live mic data to the fake mic.

But you are glossing over the details, which are considerable. You are also using the phrase “user application” rather loosely. There are two very different applications involved here. You have one innocent application using the standard audio APIs, which has been told to use the sysvad microphone and speakers. It doesn’t know there is anything funny going on. Then, you have your custom service application, which is using the live microphone and speakers, and as part of its processing is using custom APIs that you have to add to sysvad, in order to extract the speaker data from the innocent application and push filtered microphone data back in.
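The two-application arrangement can be modeled as two independent queues behind a custom interface. The following C++ sketch is an assumption-laden illustration, not an existing sysvad API: `VirtualDeviceChannel`, `ApplyGain`, and `ServiceIteration` are hypothetical names, the queues stand in for whatever private interface (for instance, custom IOCTLs) you add to the driver, and a simple gain stands in for the real filtering.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical model of the custom interface added to the driver.
// The two directions are completely independent.
struct VirtualDeviceChannel {
    std::deque<int16_t> fakeSpeakerOut;  // filled by the driver's render side, read by the service
    std::deque<int16_t> fakeMicIn;       // filled by the service, read by the driver's capture side
};

// Placeholder "filter": a gain with saturation, standing in for whatever
// processing the service actually performs.
int16_t ApplyGain(int16_t s, int gainNumerator, int gainDenominator) {
    assert(gainDenominator != 0);
    int v = s * gainNumerator / gainDenominator;
    if (v > 32767) v = 32767;
    if (v < -32768) v = -32768;
    return static_cast<int16_t>(v);
}

// One iteration of the custom service application: process samples from the
// real mic and push them into the fake mic, then pull whatever the innocent
// application played on the fake speaker and hand it to the real speaker.
void ServiceIteration(const std::vector<int16_t>& realMicSamples,
                      VirtualDeviceChannel& channel,
                      std::vector<int16_t>& realSpeakerOut) {
    for (int16_t s : realMicSamples) {
        channel.fakeMicIn.push_back(ApplyGain(s, 1, 2));
    }
    while (!channel.fakeSpeakerOut.empty()) {
        realSpeakerOut.push_back(channel.fakeSpeakerOut.front());
        channel.fakeSpeakerOut.pop_front();
    }
}
```

The innocent application never sees either queue; it only talks to the standard audio APIs, and the audio engine moves its data in and out of the driver.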

Is the buffer “AudioBufferMdl” in CMiniportWaveRTStream::AllocateBuffer what is called the cyclic buffer?

Yes. In a typical WaveRT driver, that buffer is part of the audio hardware. The AudioEngine reads and writes directly from/to that buffer, with no driver involvement. Sysvad has to be involved in this case, because of course there is no hardware. WaveRT was designed for hardware with circular buffers, which is partly why I think it is a bad choice for a virtual device.
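Because no DMA engine moves through that buffer, the driver has to simulate the moving position itself. One way to do that, and as I understand it roughly the idea behind the sample's timer-driven position updates, is to convert elapsed time into a byte count and wrap the position at the buffer size. The following C++ sketch shows only that arithmetic; the class name `SimulatedPosition`, the 100 ns time unit, and the example format (48 kHz, 16-bit stereo, so 192000 bytes per second) are assumptions, not sysvad's actual code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical simulated stream position for a cyclic buffer with no
// hardware behind it: elapsed time becomes bytes moved, and the offset
// inside the buffer wraps at the buffer size.
class SimulatedPosition {
public:
    SimulatedPosition(uint32_t bufferBytes, uint32_t bytesPerSecond)
        : bufferBytes_(bufferBytes), bytesPerSecond_(bytesPerSecond) {
        assert(bufferBytes > 0);
    }

    // Advance by 'elapsed100ns' (100-nanosecond units). Returns how many
    // bytes the driver must produce (capture) or consume (render) now.
    uint32_t Advance(uint64_t elapsed100ns) {
        const uint64_t kUnitsPerSecond = 10000000ULL;
        uint64_t scaled = elapsed100ns * bytesPerSecond_ + remainder_;
        uint64_t bytes = scaled / kUnitsPerSecond;
        remainder_ = scaled % kUnitsPerSecond;  // keep the fractional byte so rounding does not drift
        linearBytes_ += bytes;
        return static_cast<uint32_t>(bytes);
    }

    // Offset inside the cyclic buffer that corresponds to the linear position.
    uint32_t CyclicPosition() const {
        return static_cast<uint32_t>(linearBytes_ % bufferBytes_);
    }

    uint64_t LinearBytes() const { return linearBytes_; }

private:
    uint32_t bufferBytes_;
    uint32_t bytesPerSecond_;
    uint64_t linearBytes_ = 0;
    uint64_t remainder_ = 0;
};
```

With a 1920-byte (10 ms) buffer, advancing 10 ms moves exactly 1920 bytes and the cyclic position returns to 0, while the linear counter keeps growing.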

Does that buffer need to be replaced with the GenerateSine() function's buffer so that we can pass the processed data to Skype?

Well, you can’t “replace” that buffer. That buffer is the place where the Audio Engine reads the fake microphone data and writes the fake speaker data. AudioEngine reads and writes that buffer on its own. Sysvad just updates pointers to tell the AudioEngine how much data is in the buffer (or how much room). In the sample, CMiniportWaveRTStream::WriteBytes is the spot where the GenerateSine function fills in more fake microphone data to send to Audio Engine. You need to replace that with something that copies your processed data to the buffer instead. That means, of course, that you have to have a way to get your processed data into sysvad so the driver can copy it to that buffer.
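A portable C++ sketch of that replacement might look like the following. It assumes the service delivers processed data through some custom interface into a staging queue, and that the write step receives a byte count to fill, loosely mirroring the shape of the sample's WriteBytes. `CaptureStream` and `PushProcessedData` are hypothetical names, and `std::mutex` stands in for the spin lock a real driver would need, because the delivery path and the position-update path run concurrently.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <vector>

// Hypothetical capture-side model: instead of generating a sine wave, copy
// processed data (delivered by the service) into the cyclic buffer that the
// audio engine reads.
class CaptureStream {
public:
    explicit CaptureStream(size_t bufferBytes) : dmaBuffer_(bufferBytes, 0) {
        assert(bufferBytes > 0);
    }

    // Called from the (hypothetical) custom interface when the service
    // delivers processed microphone data.
    void PushProcessedData(const uint8_t* data, size_t count) {
        std::lock_guard<std::mutex> guard(lock_);
        staging_.insert(staging_.end(), data, data + count);
    }

    // Replacement for the tone-generating step: fill 'byteDisplacement' bytes
    // at the current write offset, wrapping at the end of the buffer. If the
    // service has not delivered enough data, pad with silence (0 for signed
    // 16-bit PCM) so the engine never reads stale samples.
    void WriteBytes(size_t byteDisplacement) {
        std::lock_guard<std::mutex> guard(lock_);
        for (size_t i = 0; i < byteDisplacement; ++i) {
            uint8_t b = 0;
            if (!staging_.empty()) {
                b = staging_.front();
                staging_.pop_front();
            }
            dmaBuffer_[writeOffset_] = b;
            writeOffset_ = (writeOffset_ + 1) % dmaBuffer_.size();
        }
    }

    const std::vector<uint8_t>& Buffer() const { return dmaBuffer_; }
    size_t WriteOffset() const { return writeOffset_; }

private:
    std::mutex lock_;
    std::deque<uint8_t> staging_;
    std::vector<uint8_t> dmaBuffer_;
    size_t writeOffset_ = 0;
};
```

The byte-at-a-time loop keeps the wrap-around logic obvious; a real driver would more likely copy in at most two chunks, one up to the end of the buffer and one from the start.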