Hi all,

I’m another developer with no previous experience in driver development asking for some help.

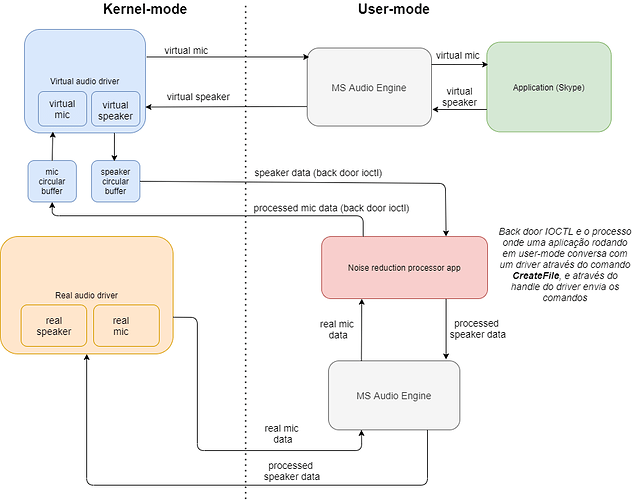

I landed in a new project where the main requirement is to build a noise removal effect that will be available system-wide, for all applications that have access to sound input/output devices, like Skype, Slack, MS Teams, etc.

As Windows audio driver development is a broad field, I’m really lost, so I started reading documentation and ended up in the sysvad sample driver.

Looking more carefully at the sample code together with the documentation, I could not connect the dots about how to process the buffers from virtual devices and send the processed buffer to a real audio adapter.

After reading some threads in this forum, I found precious information that gave me a direction to start some high-level design.

I’d like to share this design and, if possible, get some guidance from you.

Is this a feasible approach for the solution I’m looking for?

Is there any better or easier way to approach this problem?

Any information from you to shed some light on this would be great!

Thanks

Yes, that’s a feasible approach. Several companies are already doing this. The hard work, of course, is creating the noise reduction algorithm. If you don’t already have that, then you’re really too far behind to be competitive.


Hi Tim, thanks for your help!

About the processing app running in user mode, I’m thinking of using the Core Audio APIs to communicate with the real audio devices:

https://docs.microsoft.com/en-us/windows/win32/CoreAudio/core-audio-apis-in-windows-vista

and, to communicate with the virtual driver’s circular buffers as you mentioned in other posts, using IOCTLs and the CreateFile mechanism, something like this:

Is it possible to use a higher-level API like the Core Audio APIs to access the circular buffers as well?

Thanks

You can use whatever API you want to talk to the real audio devices. The Core Audio APIs are pretty easy to use.

Is it possible to use a higher-level API like the Core Audio APIs to access the circular buffers as well?

Nope. The Audio Engine has no idea there is a back door. Your driver has to simulate hardware circular buffers to satisfy the WaveRT interface, but you’ll need your own tracking for the back door.

Got it. Thanks for your help, Tim! Let’s get our hands dirty.

Hi Tim, after some work I made a little progress on the communication between the user-mode app and the virtual driver.

It was possible to get the sub-device handle by calling CreateFile.

I also created a dispatch function in the virtual driver to handle this class of IRPs:

DriverObject->MajorFunction[IRP_MJ_DEVICE_CONTROL] = NREngineHandler;

My first naive approach was to send a custom IOCTL from the user-mode app to the virtual driver, copy a chunk of data from the cyclic buffer represented by m_pDmaBuffer into a buffer allocated by the user-mode app, and then save it in a wav file:

bRc = DeviceIoControl(hDevice, (DWORD)IOCTL_NRIOCTL_METHOD_IN_BUFFERED, NULL, 0, &outputBuffer, outputBufferSize, &bytesReturned, NULL );

Of course, it does not work :)

I read in other threads on this forum that some additional cyclic buffers need to be created to hold copies of the original ones, and that the user-mode app should send IOCTLs to copy from these auxiliary buffers instead of the original ones.

Also, the copied buffers, along with notifications, need to be queued using a mechanism like the inverted call model described here:

https://www.osr.com/nt-insider/2013-issue1/inverted-call-model-kmdf/

to notify the application that buffers were filled by the audio engine and are "ready" to be read or written by the user-mode application.

I was thinking of creating a copy of the cyclic buffers and then reading/writing them from the

CMiniportWaveRTStream::ReadBytes and CMiniportWaveRTStream::WriteBytes functions

but I'm not sure if it's the right way.

If you could provide some more details about this communication mechanism, it would help me a lot.

Thanks

Of course, it did not work

Why not? What did it do?

In the WriteBytes call, you have to push data into the WaveRT buffer that the audio engine can pull out later. That data has to come from somewhere. Since that buffer really “belongs” to the Audio Engine, you’ll probably need your own. Similarly, in the ReadBytes call, you are told that the Audio Engine has shoved data into the WaveRT buffer that needs to be consumed. Again, you’ll need someplace to put that data before the Audio Engine writes over it later.

Inverted call is fine, but you need to remember that this all happens in real time. You can’t hold things up waiting for a response, in either direction. The Audio Engine assumes there is hardware at the other end of that buffer, hardware that is producing and consuming at a constant rate. Your app will need to keep the circular buffers at a relatively constant level. You’ll be supplying data just in time, and you’ll need to pull the data out almost as soon as it gets there.

Why not? What did it do?

Not sure how I can access the m_pDmaBuffer buffer from my dispatcher, so I tried this approach:

stream = static_cast<CMiniportWaveRTStream*>(_DeviceObject->DeviceExtension);
if (stream != NULL) {
    DPF(D_TERSE, ("***waveRT stream address %p", stream));
    buffer = stream->m_pDmaBuffer;
    if (buffer != NULL) {
        DPF(D_TERSE, ("***buffer address %p", buffer));
        RtlCopyBytes(outputBuffer, buffer, outputBufferLength);
        _Irp->IoStatus.Information = outputBufferLength;
    }
}

But the pointer address I’m getting in the dispatcher is not the same as the one I’m getting in the ReadBytes function.

Anyway, I copied it into the buffer from my user-mode app and, from there, saved it to a .wav file.

As I have no experience working with audio, I tried to play the saved file using VLC or Windows Media Player, but it says it’s an invalid audio file. I suspect that I need to perform some encoding before saving it to a file.

But I think encoding is not relevant when I pass the data directly to live speakers using WASAPI, is that correct?

I’m trying some approaches in a trial-and-error fashion and learning something in the process.

Thanks for your help!

I’m not sure this is really a project for a beginner. There are a lot of things to know, and you do just seem to be hacking around.

By default, the device context belongs to the Port Class driver that wraps yours. Its contents are not knowable. You CERTAINLY cannot just assume that it happens to point to one of your streams; it doesn’t. Remember, the device context is global to the entire adapter. It has to manage filters and their pins and streams. You have to be very, very careful to think about what object you are working with, and what information it knows. The streams are the lowest level; they can find their parent filter, and the parent adapter object, but the reverse is not true: you can’t go deeper into the hierarchy.

If you want your own device context section, which you certainly do, then you need to tell port class to add some extra. You do that as the last parameter in the call to PcAddAdapterDevice. The port class’s context is PORT_CLASS_DEVICE_EXTENSION_SIZE bytes long, so you’ll pass PORT_CLASS_DEVICE_EXTENSION_SIZE+sizeof(DEVICE_CONTEXT), for whatever your context is.

Then, you’ll probably want a function called GetDeviceContext that takes a device object and returns to you the part of the device context that belongs to you: (DEVICE_CONTEXT*)((PUCHAR)DeviceObject->DeviceExtension + PORT_CLASS_DEVICE_EXTENSION_SIZE).
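To make the pointer arithmetic concrete, here is a minimal sketch that compiles outside the WDK. `FakeDeviceObject`, `DeviceContext`, and `kPortClassExtensionSize` are stand-ins invented for illustration; in a real driver the device object type and `PORT_CLASS_DEVICE_EXTENSION_SIZE` come from the WDK headers, and the placeholder value used here is only an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-ins so the sketch builds in user mode. The size below is an
// assumed placeholder; a driver would use PORT_CLASS_DEVICE_EXTENSION_SIZE.
constexpr std::size_t kPortClassExtensionSize = 64 * sizeof(void*);

struct FakeDeviceObject {
    void* DeviceExtension;  // mirrors the field used in the thread
};

// Hypothetical private context: whatever the dispatcher needs, for
// example a pointer to the adapter object that owns the private buffers.
struct DeviceContext {
    void* adapterCommon;
};

// Total extension size to request when the adapter device is created:
// the Port Class part first, then our own part.
constexpr std::size_t kTotalExtensionSize =
    kPortClassExtensionSize + sizeof(DeviceContext);

// Skip the part owned by Port Class and return the part that is ours.
inline DeviceContext* GetDeviceContext(FakeDeviceObject* dev) {
    assert(dev != nullptr && dev->DeviceExtension != nullptr);
    auto* base = static_cast<std::uint8_t*>(dev->DeviceExtension);
    return reinterpret_cast<DeviceContext*>(base + kPortClassExtensionSize);
}
```

The key point is that the driver never interprets the first part of the extension; it only steps over it.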

Your dispatcher cannot access the DMA buffer directly. Your dispatcher is a global which can get access to the adapter through the device context. At that point, you don’t know which filter, which pin, or which stream you’re talking to. Remember, your driver has multiple streams: at least one going in and one going out. You will need to set up your private circular buffers in the IAdapterCommon object, and remember a pointer to that in your device context. ReadBytes and WriteBytes are part of the stream objects. They can also get to the adapter object, which is your common hookup point. So, those functions will have to copy to/from the DMA buffer into your private circular buffers in the adapter object. Your dispatcher can then pull from the private circular buffers (again through the adapter object) and copy from/to your client.

Also remember that you will need one private circular buffer in each direction. The stream will need to know which direction it is going, and it’s easy to get those confused. You need to think about “am I speaker/renderer here, or am I microphone/capture here?” Each stream only worries about one of them, but your dispatcher will have access to both. You’ll also probably need a chart to remind you whether you are reading from or writing to the buffer. ReadBytes, for example, is called in the speaker/renderer path. It reads from the DMA buffer, and writes to the speaker circular buffer. Your corresponding ReadFile dispatcher, then, needs to read from the speaker/renderer circular buffer.
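As a rough illustration of "one private circular buffer in each direction", the sketch below uses a simple user-mode byte ring buffer. `RingBuffer` and `BackDoorBuffers` are hypothetical names, the 8192-byte capacity is arbitrary, and a real driver would also need locking between the stream callbacks and the dispatcher, which is left out here. The comments on the two members play the role of the "chart".

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal byte ring buffer (single-threaded sketch, no locking).
class RingBuffer {
public:
    explicit RingBuffer(std::size_t capacity) : data_(capacity) {
        assert(capacity > 0);
    }

    // Copies in as many of the len bytes as fit; returns that count.
    std::size_t Write(const std::uint8_t* src, std::size_t len) {
        const std::size_t n = std::min(len, data_.size() - fill_);
        for (std::size_t i = 0; i < n; ++i)
            data_[(head_ + i) % data_.size()] = src[i];
        head_ = (head_ + n) % data_.size();
        fill_ += n;
        return n;
    }

    // Copies out up to len bytes, oldest first; returns that count.
    std::size_t Read(std::uint8_t* dst, std::size_t len) {
        const std::size_t n = std::min(len, fill_);
        for (std::size_t i = 0; i < n; ++i)
            dst[i] = data_[(tail_ + i) % data_.size()];
        tail_ = (tail_ + n) % data_.size();
        fill_ -= n;
        return n;
    }

    std::size_t Fill() const { return fill_; }

private:
    std::vector<std::uint8_t> data_;
    std::size_t head_ = 0;  // next write position
    std::size_t tail_ = 0;  // next read position
    std::size_t fill_ = 0;  // bytes currently stored
};

// Hypothetical holder that would live in the adapter object.
struct BackDoorBuffers {
    // Render path: ReadBytes writes here, the app's read request reads.
    RingBuffer speaker{8192};
    // Capture path: the app's write request writes here, WriteBytes reads.
    RingBuffer microphone{8192};
};
```

Each stream touches only its own member, while the dispatcher, reaching the adapter through the device context, can see both.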

Thanks, Tim, for all this detailed information!

I was going in a completely wrong direction: I was trying to create the private buffer inside the CMiniportWaveRTStream object and access it from the dispatcher.

Now the process is a bit clearer to me. I don’t know the details of implementing this approach yet, but you gave me the overall idea and a good starting point. I’ll try to apply what you said and go deeper into this.

If I have more specific questions (I certainly will), I’ll post them here with more details.

Thanks a lot!

Thanks for your help, I was able to copy the DMA buffer to the private buffer.

I created a private buffer of 1 MB and, from the ReadBytes function, I’m filling it with data until it’s completely full.

To verify that the buffer is OK, I’m passing it to the m_SaveData.WriteData function and, from the dispatcher, I’m copying the data to the client app through the DeviceIoControl function.

The data saved by the m_SaveData.WriteData function is a little bigger than 1 MB, and I can play the generated wav file in some players, like VLC. The file I’m copying to the client app is exactly 1 MB and cannot be played.

I think the data is OK, because the WriteData function performs extra processing when saving frames, but the data copied directly from the DMA buffer is raw, which is why it is not correctly encoded to be played. Is that correct?

A megabyte is about 5 seconds’ worth of 48 kHz, 16-bit stereo data. You don’t want to introduce 5 seconds of latency in your audio. For streaming, any buffer bigger than 8k is too much.
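The arithmetic behind that estimate can be checked with a small helper. This is only a sketch; `BufferMillis` is a name made up here, and 16-bit samples are assumed for the "5 seconds" figure.

```cpp
#include <cassert>
#include <cstdint>

// Duration, in milliseconds, of bufferBytes of uncompressed PCM audio.
inline double BufferMillis(std::uint64_t bufferBytes,
                           std::uint32_t sampleRate,
                           std::uint32_t channels,
                           std::uint32_t bitsPerSample) {
    assert(sampleRate > 0 && channels > 0 && bitsPerSample >= 8);
    const double bytesPerSecond =
        static_cast<double>(sampleRate) * channels * (bitsPerSample / 8);
    return 1000.0 * static_cast<double>(bufferBytes) / bytesPerSecond;
}
```

With 48 kHz, 16-bit stereo (192,000 bytes per second), `BufferMillis(1024 * 1024, 48000, 2, 16)` comes to roughly 5.5 seconds, while `BufferMillis(8192, 48000, 2, 16)` is about 43 ms.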

I’m guessing you don’t understand the format of a wave file. You can’t just save a bunch of bytes to a file and pass it to a player. The file has to have a specific set of headers that identify the type of file and the exact format of the audio data. The sysvad CSaveData class has code to create those headers. It’s easy. The only tricky part is that you have to go fill in the length of the file in a couple of places when the capture is complete.
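For reference, a minimal sketch of the canonical 44-byte header for integer PCM data is shown below. This is not the sysvad CSaveData code; `MakeWavHeader`, `PutLE`, and `PutTag` are helper names invented for this example. The two length fields mentioned above are marked in the comments. Floating-point data would need a different format tag (3, WAVE_FORMAT_IEEE_FLOAT, instead of 1).

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Appends the low `bytes` bytes of v in little-endian order.
inline void PutLE(std::vector<std::uint8_t>& out, std::uint32_t v, int bytes) {
    for (int i = 0; i < bytes; ++i)
        out.push_back(static_cast<std::uint8_t>((v >> (8 * i)) & 0xFF));
}

// Appends a four-character chunk tag such as "RIFF".
inline void PutTag(std::vector<std::uint8_t>& out, const char* tag) {
    out.insert(out.end(), tag, tag + 4);
}

// Builds a 44-byte RIFF/WAVE header for dataBytes of integer PCM samples.
inline std::vector<std::uint8_t> MakeWavHeader(std::uint32_t sampleRate,
                                               std::uint16_t channels,
                                               std::uint16_t bitsPerSample,
                                               std::uint32_t dataBytes) {
    assert(channels > 0 && bitsPerSample >= 8);
    const std::uint32_t blockAlign = channels * (bitsPerSample / 8u);
    std::vector<std::uint8_t> h;
    PutTag(h, "RIFF");
    PutLE(h, 36 + dataBytes, 4);          // length #1: file size minus 8
    PutTag(h, "WAVE");
    PutTag(h, "fmt ");
    PutLE(h, 16, 4);                      // size of the fmt chunk body
    PutLE(h, 1, 2);                       // format tag 1 = integer PCM
    PutLE(h, channels, 2);
    PutLE(h, sampleRate, 4);
    PutLE(h, sampleRate * blockAlign, 4); // average bytes per second
    PutLE(h, blockAlign, 2);
    PutLE(h, bitsPerSample, 2);
    PutTag(h, "data");
    PutLE(h, dataBytes, 4);               // length #2: bytes of sample data
    return h;
}
```

When the final size is not known up front, one common approach is to write the header with zero lengths, append the samples, and then seek back to offsets 4 and 40 to patch the two length fields.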

got it! Thanks!

Hi Tim, how are you doing?

I finally managed to send some audio data to the sysvad microphone, but the sound has some distortion. Let me describe what I’m doing in detail:

1 - I’m using a sample program from MS, CaptureSharedEventDriven, to capture audio using the real microphone on my system.

2 - The mix format of the real microphone is:

Num channels: 2

bits per sample: 32

samples per second: 48000

avg bytes per second: 384000

Block align: 8

3 - The MS sample captures audio and saves it in a wave file, so I needed to change it a little to skip the wave header and save only the raw data in a file.

4 - From my client app, I’m sending the captured raw buffer to sysvad via IOCTL.

5 - In sysvad, I needed to set the default format of the virtual mic I’m using to the same as the raw format:

{
    WAVE_FORMAT_EXTENSIBLE,                             // wFormatTag
    2,                                                  // nChannels
    48000,                                              // nSamplesPerSec
    384000,                                             // nAvgBytesPerSec
    8,                                                  // nBlockAlign
    32,                                                 // wBitsPerSample
    sizeof(WAVEFORMATEXTENSIBLE) - sizeof(WAVEFORMATEX) // cbSize
},
STATIC_AUDIO_SIGNALPROCESSINGMODE_DEFAULT,
&MicArray2PinSupportedDeviceFormats[SIZEOF_ARRAY(MicArray2PinSupportedDeviceFormats)-1].DataFormat

6 - In WriteBytes, I’m copying data from the private buffer to dmaBuffer this way:

ULONG runWrite = min(ByteDisplacement, m_ulDmaBufferSize - bufferOffset);
if (m_pExtensionData != NULL && m_pDmaBuffer != NULL) {
    ULONG ulRemainingOffset = m_ulSpaceAvailable - m_ulExtensionDataPosition;
    UINT32 frameSize = ((UINT32)m_pWfExt->Format.wBitsPerSample / 8) * m_pWfExt->Format.nChannels;
    // Copy only whole frames
    UINT32 framesToCopy = ulRemainingOffset / frameSize;
    UINT32 bytesToCopy = min(runWrite, framesToCopy * frameSize);
    if (bytesToCopy > 0 && ulRemainingOffset >= bytesToCopy) {
        RtlCopyMemory(m_pDmaBuffer + bufferOffset, m_pExtensionData, bytesToCopy);
        m_pExtensionData += bytesToCopy;
        m_ulExtensionDataPosition += bytesToCopy;
    }
    else {
        DPF(D_TERSE, ("***No room to advance ulRemainingOffset %lu", ulRemainingOffset));
        // Call the tone generator instead of copying data from the audio file
        m_ToneGenerator.GenerateSine(m_pDmaBuffer + bufferOffset, runWrite);
    }
}
bufferOffset = (bufferOffset + runWrite) % m_ulDmaBufferSize;
ByteDisplacement -= runWrite;

7 - After the data is loaded into dmaBuffer, I run the CaptureSharedEventDriven program again and capture audio from the sysvad virtual microphone.

My intention with this test is to copy the captured audio data into dmaBuffer and, after it reaches the end, fill it with a sine tone by calling the GenerateSine function.

I could achieve the goal, partially. The problem is that the audio is there, but it’s very distorted.

I’m not sure if the problem is in the logic that copies the captured data into the buffer, the process that generates the raw buffer, or some misconfiguration caused by the changes I made to the microphone pin format.

Do you have any idea about what can be causing this distortion? Any information or documentation you have would help me a lot!

Thanks

How did you set the format? Are you dumping the format data to make sure Audio Engine has really agreed to your format? Is your wave data floating point data or integer? Since it’s 32 bits per sample, it could be either. The sine generator always generates integer data. If you want to send floats, you have to advertise that in the WAVEFORMATEXTENSIBLE, using KSDATAFORMAT_SUBTYPE_IEEE_FLOAT instead of KSDATAFORMAT_SUBTYPE_PCM.
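If the captured data turns out to be 32-bit float while the virtual microphone advertises 32-bit integer PCM, one option (instead of advertising float) is to convert each sample before handing it to the driver. The following is a minimal sketch of such a conversion; `FloatToPcm32` is a name made up here, and the scaling convention (full scale mapped to ±2147483647, with clamping) is one common choice rather than the only one.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Converts one IEEE float sample in [-1.0, 1.0] to 32-bit integer PCM.
// Out-of-range input is clamped instead of being allowed to wrap around.
inline std::int32_t FloatToPcm32(float sample) {
    const double clamped =
        std::max(-1.0, std::min(1.0, static_cast<double>(sample)));
    return static_cast<std::int32_t>(std::llround(clamped * 2147483647.0));
}
```

Without a conversion like this, or a matching SubFormat, float bit patterns are interpreted as integers: 1.0f has the bit pattern 0x3F800000, which as an integer sample is roughly half of full scale, and that kind of mismatch produces heavy distortion.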

Hi Tim,

Finally, I managed to get audio buffers from sysvad in real time and get a notification in the client app when other apps send data to the sysvad speaker.

I’m using the following approach to capture the audio data and save it to a file:

From the client app:

1 - Create a thread that waits in a loop for an event signaled by the driver when an 8k buffer is completely filled with data from dmaBuffer

2 - When the event is signaled, send an async IOCTL to get the data filled by the driver.

3 - Get the data received in a completion port thread with the GetQueuedCompletionStatus function

4 - Copy the received buffer into a bigger final buffer

5 - When the final buffer is completely full, save it in a wave file.

From the driver:

1 - ReadBytes calls a helper function I created, CopyBytes, to copy data from dmaBuffer to the private cyclic buffer.

2 - CopyBytes copies data from dmaBuffer until the cyclic buffer is full

3 - When the cyclic buffer is full, signal the event to wake up the client app’s thread, which gets the data through an async IOCTL

4 - If there are any remaining bytes, copy them to the beginning of the cyclic buffer, advance the buffer’s position by the number of bytes copied, and start the process over again.

The client app is getting the buffers in the correct order, but when I join the received buffers together and save them in a wave file, there's a kind of "pop" sound between the buffers that degrades the final sound quality.

Strangely, when I save the cyclic buffer on the driver's side using the m_SaveData.WriteData() function, the sound is OK.

Do you have any suggestion about what could be causing this problem?

Thanks,

The “pop” indicates that the data is not contiguous. I’m concerned about your “from the driver” steps there. You said you only signal the app when the cyclic buffer is full, but if there’s something remaining, you copy it into the beginning of the cyclic buffer. Doesn’t that mean you’ve now destroyed early bytes in that buffer that the application hasn’t read yet? The purpose of the circular buffer is to allow the driver to continue to accumulate data until the app can pull its chunk out. Perhaps you should signal the app when the buffer is half-full or 3/4-full, so you have room to add additional data.
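The bookkeeping for "signal before the buffer is full" can be sketched independently of the audio data itself. In this user-mode sketch, `FillTracker` and its method names are hypothetical; it tracks only byte counts, reports when the fill level crosses a threshold (the moment the driver would set the event), and counts bytes lost when the producer laps the consumer, which is the situation that causes the pop.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Tracks the fill level of a ring buffer with monotonic byte counters.
// No samples are stored here; this is only the position bookkeeping.
class FillTracker {
public:
    FillTracker(std::size_t capacity, std::size_t signalThreshold)
        : capacity_(capacity), threshold_(signalThreshold) {
        assert(threshold_ > 0 && threshold_ < capacity_);
    }

    // Producer side (for example, called from ReadBytes). Returns true
    // when this call moves the fill level from below the threshold to at
    // or above it, i.e. when the app should be signaled.
    bool OnProduced(std::size_t bytes) {
        const std::size_t before = Fill();
        written_ += bytes;
        if (Fill() > capacity_) {
            // The producer overwrote data the consumer never read.
            overrunBytes_ += Fill() - capacity_;
            read_ = written_ - capacity_;
        }
        return before < threshold_ && Fill() >= threshold_;
    }

    // Consumer side (for example, called when the app's request is served).
    void OnConsumed(std::size_t bytes) {
        assert(bytes <= Fill());
        read_ += bytes;
    }

    std::size_t Fill() const {
        return static_cast<std::size_t>(written_ - read_);
    }
    std::uint64_t OverrunBytes() const { return overrunBytes_; }

private:
    std::size_t capacity_;
    std::size_t threshold_;
    std::uint64_t written_ = 0;       // total bytes ever produced
    std::uint64_t read_ = 0;          // total bytes ever consumed or dropped
    std::uint64_t overrunBytes_ = 0;  // bytes lost to overwrites
};
```

With an 8192-byte buffer and a 4096-byte threshold, the app is signaled while there is still half a buffer of headroom, so a non-zero `OverrunBytes()` would indicate that the app is not keeping up.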

Thanks for your help, worked perfectly!

Hello gentlemen,

We are using the Sysvad sample to implement our noise remover.

We made a POC where a user program receives the Sysvad speaker stream and saves it to a file (instead of the file being saved by Sysvad itself).

This is working fine.

However, we are facing some difficulties implementing the microphone part. We tried to use the same logic as for the speaker, but the result is not working as it should.

What we are doing initially is:

1 - the application sends an 800k buffer to Sysvad (the WAV file data with 1 channel, 16 bits, 48000 Hz, which is the same as the Sysvad microphone format)

2 - in the WriteBytes method, we use ByteDisplacement as the number of bytes to copy from the received buffer to the Sysvad m_pDmaBuffer

3 - start the Voice Recorder and record the audio received from the Sysvad microphone

But the recorded sound has poor quality: it is full of stutters and glitches and, at a certain moment, it sounds like the noise of a modem connection.

Is there anything else we need to control?

Our WriteBytes implementation:

VOID CMiniportWaveRTStream::WriteBytes
(
    _In_ ULONG ByteDisplacement
)
/*++

Routine Description:

This function writes the audio buffer using a received buffer from user application

Arguments:

ByteDisplacement - # of bytes to process.

--*/
{
    ULONG bufferOffset = m_ullLinearPosition % m_ulDmaBufferSize;
    ULONG runWrite = 0;

    if (m_pExtensionData != NULL && m_pDmaBuffer != NULL) {
        // Is a pointer to buffer's start that persists over each iteration
        // and maps the audio starting point to be sent to the system
        BYTE* sendIni = m_pExtensionData->MicBufferSendIni;

        // Is a pointer to buffer's end that persists over each iteration
        // and maps the audio ending point to be sent to the system
        BYTE* sendEnd = m_pExtensionData->MicBufferSendEnd;

        // maps how many bytes left in the buffer to be sent
        runWrite = (ULONG)(sendEnd - sendIni);

        while (ByteDisplacement > 0)
        {
            // ensure we never read beyond our buffer
            LONG minWrite = min(ByteDisplacement, min(m_ulDmaBufferSize - bufferOffset, runWrite));
            if (minWrite <= 0) {
                break;
            }

            RtlCopyMemory(m_pDmaBuffer + bufferOffset, sendIni, minWrite);
            bufferOffset = (bufferOffset + minWrite) % m_ulDmaBufferSize;
            ByteDisplacement -= minWrite;
            runWrite -= minWrite;

            // slide the pointer to the next piece of buffer to be sent
            sendIni += minWrite;
        }

        // slide the initial position to be sent in the next run
        m_pExtensionData->MicBufferSendIni = sendIni;
    }
}

Here is the part of the MJ function that receives the buffer from the application:

{
    .....

    // Reading client app input/output buffer information
    systemBuffer = (BYTE*)_Irp->AssociatedIrp.SystemBuffer;

    // the buffer size informed by the application
    inputBufferLength = stack->Parameters.DeviceIoControl.InputBufferLength;

    // Get pointer to the device extension reserved to store our data (copy of cyclic buffers)
    ExtensionData = (PNOISE_DATA_STRUCTURE)((PCHAR)_DeviceObject->DeviceExtension + PORT_CLASS_DEVICE_EXTENSION_SIZE);

    if (inputBufferLength > 0 && ExtensionData != NULL && systemBuffer != NULL) {
        DPF(D_TERSE, ("***Input received of %lu bytes", inputBufferLength));

        // copy the received buffer to our structure
        RtlCopyMemory(ExtensionData->MicBuffer, systemBuffer, inputBufferLength);

        // set the relative buffer end (is less or equal buffer size on our example)
        ExtensionData->MicBufferEnd += inputBufferLength;

        // maps the buffer's end position to be read on the WriteBytes function
        ExtensionData->MicBufferSendEnd = ExtensionData->MicBufferEnd;

        bufLen = inputBufferLength;
    }

    _Irp->IoStatus.Information = bufLen;
    _Irp->IoStatus.Status = STATUS_SUCCESS;
    IoCompleteRequest(_Irp, IO_NO_INCREMENT);
    return STATUS_SUCCESS;
    break;

    .....
}

Is there anything else we need to take care of?

Thanks in advance!

André Fellows