Hello,

I am porting a driver from WDF to WDM, and the codebase I am porting into is entirely written in WDM. So I am wondering: can I make use of WDF objects and functions that have no dependency on the WDFDRIVER or the WDFDEVICE? For instance, I have some routines using WDFWAITLOCK and WDFSPINLOCK. Can I use those? Is this a valid scenario?

I’ve looked for this across the forum, but I wasn’t able to find a clear answer. I see the scenario of a WDF driver using some WDM constructs, but I am trying to do the opposite.

Thanks!

I am porting a driver from WDF to WDM

Why on earth are you doing THAT? I think you’re the first person I’ve ever heard of doing such a thing.

I have some routines using WDFWAITLOCK and WDFSPINLOCK, can I use those?

No. But why on earth would you want to? A WDFSPINLOCK is just a KSPIN_LOCK, and a WDFWAITLOCK can be replaced by a KMUTEX. That should be reasonably easy.

ETA:

Instead of WdfSpinLockAcquire, call KeAcquireSpinLock.

Instead of WdfSpinLockRelease, call KeReleaseSpinLock.

Like that…
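To make “spin lock” concrete, here is a minimal user-mode sketch, in portable C11 with pthreads rather than kernel code, of the test-and-set loop at the heart of a spin lock. The names (spin_lock, spin_acquire, spin_release, spin_demo) are hypothetical. A real KSPIN_LOCK acquisition also raises IRQL to DISPATCH_LEVEL, which user mode cannot reproduce, so that part is deliberately left out.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* User-mode model of the spinning part of a spin lock (C11 atomics + pthreads).
 * A real KSPIN_LOCK acquire also raises IRQL to DISPATCH_LEVEL; user mode has
 * no equivalent, so that part is not modelled. */
typedef struct {
    atomic_flag held;
} spin_lock;

static void spin_acquire(spin_lock *l)
{
    /* Atomic test-and-set: retry until this caller is the one that set it. */
    while (atomic_flag_test_and_set_explicit(&l->held, memory_order_acquire)) {
        /* busy-wait; whoever wins the next test-and-set gets the lock */
    }
}

static void spin_release(spin_lock *l)
{
    atomic_flag_clear_explicit(&l->held, memory_order_release);
}

/* Demo: several threads increment a shared counter under the lock. */
static spin_lock demo_lock = { ATOMIC_FLAG_INIT };
static long demo_counter;

static void *spin_worker(void *arg)
{
    int iterations = *(const int *)arg;
    for (int i = 0; i < iterations; i++) {
        spin_acquire(&demo_lock);
        demo_counter++;                 /* protected by demo_lock */
        spin_release(&demo_lock);
    }
    return NULL;
}

/* Runs nthreads (at most 16) workers and returns the final counter value. */
static long spin_demo(int nthreads, int iterations)
{
    pthread_t threads[16];
    if (nthreads > 16)
        nthreads = 16;
    demo_counter = 0;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&threads[i], NULL, spin_worker, &iterations);
    for (int i = 0; i < nthreads; i++)
        pthread_join(threads[i], NULL);
    return demo_counter;
}
```

In the driver itself you would keep using a KSPIN_LOCK with KeInitializeSpinLock, KeAcquireSpinLock and KeReleaseSpinLock; the sketch only shows why a waiter burns CPU instead of sleeping.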

Peter

Hi Peter,

Thanks for the reply. I saw the document on the equivalent calls, and using it was my first plan, but I wanted to know whether mixing the two was possible. The reason is that we have a large driver written with WDM. We have a reference driver, written with WDF, that implements a feature we need, so I am porting that feature into our driver and converting it to WDM along the way.

Thanks for the advice!

… so I am wondering, I can make use of WDF objects and functions where there is no dependency from the WDFDRIVER or the WDFDEVICE? For instance, I have some routines using WDFWAITLOCK and WDFSPINLOCK, can I use those? is this a valid scenario?

Well, taking into consideration that WDF is a framework, an answer to this question seems (at least to me) to be pretty obvious - you cannot use parts of a framework in a project that is not based upon this particular framework. Just think of it in terms of dependencies involved - basically, this is exactly the same thing as using the stuff from a header A without installing headers B, C and D that the header A depends on.

I see the scenario of having a WDF driver and using some WDM stuff

This is, indeed, possible, and it is called “stepping out of the framework”. Although there are no technical limitations here, you should always consider the possibility of getting into a conflict with the framework in question. The problem is that frameworks tend to make certain assumptions about your code, and may impose rules and limitations that your code has to follow. Therefore, every “breakout” has to be judged on a case-by-case basis…

Anton Bassov

Hello Anton, actually I built the WDM driver using WDFWAITLOCK/WDFSPINLOCK and the driver works correctly. Still, I understand your point and I won’t use that approach. I would like to ask a follow-up question here (to avoid creating another thread).

Following the Microsoft document on the equivalent WDM and WDF objects and functions, I found that the replacement for WDFWAITLOCK is a KEVENT with KeWaitForSingleObject. But suppose I am running at DISPATCH_LEVEL and call KeWaitForSingleObject: if I don’t set a timeout, it will hang the driver. So I want to understand the correct way to port a WDFWAITLOCK. Am I expected to set an arbitrary timeout, or is it simply not meant to be used at DISPATCH_LEVEL?

Thanks once again.

the case of WDFWAITLOCK the correct way is to use KEVENT and KeWaitForSingleObject

Nope. A WDFWAITLOCK is a Mutex, not an event.

but let’s suppose that I am running at DISPATCH_LEVEL and then I would call to KeWaitForSingleObject,

You can’t block on a WDFWAITLOCK, or wait on any other dispatcher object, at IRQL DISPATCH_LEVEL. You use spin locks when running at DISPATCH_LEVEL instead.

Peter

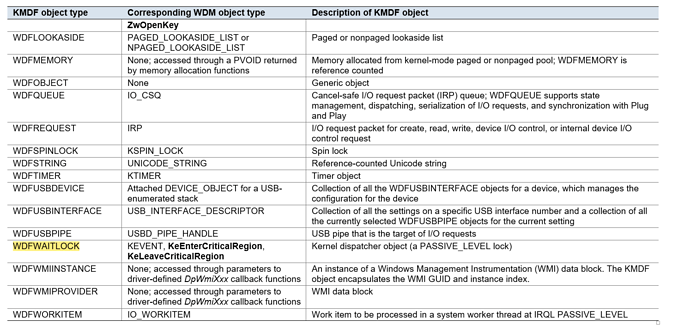

That clears the question, but I am confused, there is a document online about the equivalences between WDF and WDM and found this:

As you can see they mention KEVENT and that was the reason for me to ask this. I’ll follow the advice, in fact I already tried with KSPIN_LOCK and it does indeed work as I need. Thank you Peter.

As you can see they mention KEVENT

Yeah. I don’t know where that table is, or what it’s intending to convey… but a WDFWAITLOCK is not equivalent to a KEVENT. Think about it: Only one concurrent acquisition of a WDFWAITLOCK is allowed… that’s not how a KEVENT works.

Peter

Lost_bit wrote:

Hello Anton, actually I built the WDM driver and used the WDFWAITLOCK/WDFSPINLOCK and the driver works correctly, however I understand and I won’t use that approach. However I would like to ask the following question (to avoid creating another thread).

It is worth noting that you can use KMDF features in an otherwise pure WDM driver by using KMDF’s “miniport mode”, where it doesn’t try to dispatch the IRPs.

https://docs.microsoft.com/en-us/windows-hardware/drivers/wdf/creating-kmdf-miniport-drivers

Hello Anton, actually I built the WDM driver and used the WDFWAITLOCK/WDFSPINLOCK and the driver works correctly,

To be honest, I am quite surprised that you did not encounter any compiling or linking errors - as it turns out, I put a foot in my mouth yet another time…

As you can see they mention KEVENT and that was the reason for me to ask this.

Please note that KEVENT is just a structure wrapping a DISPATCHER_HEADER, the header that all dispatcher objects, including KMUTEX, begin with.

Therefore, you can, indeed, use KeWaitXXX() with KMUTEX - there is no mistake in the table. However, you should not expect it to work as a mutex if you use it this way. Furthermore, I am not sure if you may use it as a binary semaphore either, because it may work as a notification event, rather than a synchronisation one, if you use it this way.

In other words, a call to KeWaitXXX() is perfectly legal in itself, but the semantics of this call may be different from the ones that you expect. This is what Peter is trying to explain to you…
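The difference between notification and synchronization events can be modelled in user mode. The sketch below assumes pthreads and uses hypothetical names (model_event, event_set, event_wait, event_try_wait) to mimic the two flavours that KeInitializeEvent selects with NotificationEvent or SynchronizationEvent: a notification event stays signaled and lets every waiter through, so it cannot provide mutual exclusion, while a synchronization event lets one waiter through per set.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* User-mode model of the two kernel event flavours, built on pthreads.
 * Hypothetical names; this is not how KEVENT is implemented. */
typedef struct {
    pthread_mutex_t mtx;
    pthread_cond_t cond;
    bool signaled;
    bool auto_reset;   /* true: "synchronization" event; false: "notification" event */
} model_event;

static void event_init(model_event *e, bool auto_reset, bool initially_signaled)
{
    pthread_mutex_init(&e->mtx, NULL);
    pthread_cond_init(&e->cond, NULL);
    e->signaled = initially_signaled;
    e->auto_reset = auto_reset;
}

static void event_set(model_event *e)
{
    pthread_mutex_lock(&e->mtx);
    e->signaled = true;
    if (e->auto_reset)
        pthread_cond_signal(&e->cond);      /* wake at most one waiter */
    else
        pthread_cond_broadcast(&e->cond);   /* wake every waiter */
    pthread_mutex_unlock(&e->mtx);
}

/* Blocking wait, comparable to waiting with no timeout. */
static void event_wait(model_event *e)
{
    pthread_mutex_lock(&e->mtx);
    while (!e->signaled)
        pthread_cond_wait(&e->cond, &e->mtx);
    if (e->auto_reset)
        e->signaled = false;                /* the released waiter consumes the signal */
    pthread_mutex_unlock(&e->mtx);
}

/* Non-blocking check, comparable to a zero-timeout wait: true if satisfied. */
static bool event_try_wait(model_event *e)
{
    pthread_mutex_lock(&e->mtx);
    bool satisfied = e->signaled;
    if (satisfied && e->auto_reset)
        e->signaled = false;
    pthread_mutex_unlock(&e->mtx);
    return satisfied;
}
```

Even the synchronization flavour has no notion of an owner: any thread may set it, whereas a mutex has to be released by the thread that acquired it.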

Anton Bassov

Thanks for your replies, and sorry for the long silence; I was working on other stuff.

So what I need is a mutex/lock that works at PASSIVE_LEVEL without changing the IRQL. KSPIN_LOCK changes the IRQL, right? So what would be a good option in this case?

So what I need is a mutex/lock that works in PASSIVE_LEVEL without changing the IRQL. The KSPIN_LOCK changes the IRQL right?

so what would be a good option in this case?

KMUTEX seems to be the right way to go…

Anton Bassov

A whirlwind comparison of locks:

- ExAcquirePushLockExclusive: spins, synchronized at APC_LEVEL, unfair.

- KeAcquireSpinLock: spins, synchronized at DISPATCH_LEVEL, unfair.

- KeAcquireInStackQueuedSpinLock: spins, synchronized at DISPATCH_LEVEL, fair.

- NdisAcquireRWLockWrite: spins, synchronized at DISPATCH_LEVEL, rw-fair / ww-unfair, scalable.

- ExAcquireFastMutex: sleeps, synchronized at APC_LEVEL, fair.

- ExAcquireFastResourceExclusive: sleeps, synchronized at APC_LEVEL, fairness is optional.

- KMUTEX: sleeps, synchronized at APC_LEVEL, deprecated.

What’s the difference between sleeping and spinning? If the lock is contended, sleep means you let the processor do other useful work while you wait. But if the lock spins, then you get better latency on acquire. Spin when you expect low contention and minimal waiting. Sleep when you expect someone might have to wait for a long time. E.g., if you hold a lock while doing I/O to the registry, you should use a lock that sleeps. Don’t worry: most Windows “sleep” locks are smart enough to spin for a short while before actually sleeping, so they aren’t expensive if the lock contention is brief. Spinning is terrible for perf if a scheduler can yank the thread/processor out from under the guy you’re spinning on; this is why there’s no public lock that spins at less than DISPATCH_LEVEL, and also why spinlocks need an enlightenment when run in a guest VM.
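The “spin for a short while before actually sleeping” behaviour can be sketched in user mode as a bounded number of non-blocking attempts followed by a blocking acquire. This is a hypothetical illustration built on pthread_mutex_trylock and pthread_mutex_lock, with an arbitrary SPIN_ATTEMPTS of 100; it is not how any Windows lock is actually implemented, and pthread_mutex_lock may itself spin internally before blocking.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <time.h>

/* Hypothetical spin-then-sleep lock: a bounded number of non-blocking attempts,
 * then a blocking acquire. Illustration only, not a Windows algorithm. */
#define SPIN_ATTEMPTS 100

typedef struct {
    pthread_mutex_t mtx;
} adaptive_lock;

static void adaptive_init(adaptive_lock *l) { pthread_mutex_init(&l->mtx, NULL); }

/* Returns 1 if the lock was obtained while spinning, 0 if the caller had to block. */
static int adaptive_acquire(adaptive_lock *l)
{
    for (int i = 0; i < SPIN_ATTEMPTS; i++) {
        if (pthread_mutex_trylock(&l->mtx) == 0)
            return 1;                   /* brief contention: never slept */
    }
    pthread_mutex_lock(&l->mtx);        /* long contention: block instead of burning CPU */
    return 0;
}

static void adaptive_release(adaptive_lock *l) { pthread_mutex_unlock(&l->mtx); }

/* Demo: the main thread holds the lock for hold_ms while a second thread tries
 * to take it. Returns how the second thread got the lock (1 spin, 0 sleep). */
static adaptive_lock demo;
static atomic_int contender_started;
static int contender_result;

static void *contender(void *arg)
{
    (void)arg;
    atomic_store(&contender_started, 1);
    contender_result = adaptive_acquire(&demo);
    adaptive_release(&demo);
    return NULL;
}

static int contended_demo(int hold_ms)
{
    pthread_t t;
    struct timespec hold = { hold_ms / 1000, (long)(hold_ms % 1000) * 1000000L };
    adaptive_init(&demo);
    atomic_store(&contender_started, 0);
    pthread_mutex_lock(&demo.mtx);      /* hold the lock before the contender runs */
    pthread_create(&t, NULL, contender, NULL);
    while (!atomic_load(&contender_started)) {
        /* wait until the contender is about to start spinning */
    }
    nanosleep(&hold, NULL);             /* far longer than SPIN_ATTEMPTS trylocks */
    pthread_mutex_unlock(&demo.mtx);
    pthread_join(t, NULL);
    return contender_result;
}
```

Under brief contention the caller never sleeps; if the holder keeps the lock longer than the spin budget, the caller blocks and the processor is free for other work.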

What’s fairness? A lock is fair if it is granted to threads/processors who ask for it in a roughly FIFO order. Unfair locks grant the lock to a random lottery winner each time it becomes available. I say the NDIS lock is “rw-fair” because between a reader and a writer, the lock is fair. I say it’s “ww-unfair” because between two writers, the lock is unfair. (But you shouldn’t use RW locks anyway if you are likely to have multiple writers.)
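A ticket lock is perhaps the simplest way to see what FIFO fairness means for a spin lock. The sketch below uses C11 atomics and hypothetical names; Windows’ in-stack queued spin locks use a queue of per-waiter entries rather than a ticket counter, although both grant the lock in arrival order. The sched_yield() call in the spin loop is a user-mode concession: a user thread can be descheduled while it is next in line, which is exactly the “yank the thread out from under the guy you’re spinning on” problem above, and with a strict FIFO lock everyone behind it then waits too.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>

/* Ticket lock: a simple FIFO-fair spin lock (C11 atomics). */
typedef struct {
    atomic_uint next_ticket;   /* ticket handed to the next arriving waiter */
    atomic_uint now_serving;   /* ticket that currently owns the lock */
} ticket_lock;

static void ticket_init(ticket_lock *l)
{
    atomic_init(&l->next_ticket, 0);
    atomic_init(&l->now_serving, 0);
}

/* Takes a ticket, spins until it is served, and returns the ticket number. */
static unsigned ticket_acquire(ticket_lock *l)
{
    unsigned mine = atomic_fetch_add_explicit(&l->next_ticket, 1, memory_order_relaxed);
    while (atomic_load_explicit(&l->now_serving, memory_order_acquire) != mine) {
        sched_yield();   /* user mode only: let a descheduled holder/next waiter run */
    }
    return mine;
}

static void ticket_release(ticket_lock *l)
{
    /* Only the owner writes now_serving, so load + store is race-free. */
    unsigned cur = atomic_load_explicit(&l->now_serving, memory_order_relaxed);
    atomic_store_explicit(&l->now_serving, cur + 1, memory_order_release);
}

/* Demo: several threads increment a shared counter under the ticket lock. */
static ticket_lock demo_tl;
static long demo_total;

static void *ticket_worker(void *arg)
{
    int iterations = *(const int *)arg;
    for (int i = 0; i < iterations; i++) {
        ticket_acquire(&demo_tl);
        demo_total++;                   /* protected by demo_tl */
        ticket_release(&demo_tl);
    }
    return NULL;
}

/* Runs nthreads (at most 16) workers and returns the final counter value. */
static long ticket_demo(int nthreads, int iterations)
{
    pthread_t threads[16];
    if (nthreads > 16)
        nthreads = 16;
    ticket_init(&demo_tl);
    demo_total = 0;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&threads[i], NULL, ticket_worker, &iterations);
    for (int i = 0; i < nthreads; i++)
        pthread_join(threads[i], NULL);
    return demo_total;
}
```

Compare this with the test-and-set style, where whichever waiter happens to win the next atomic operation gets the lock: that is the “random lottery winner” behaviour described above.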

What’s a scalable lock? When one thread/processor is acquiring + releasing a read-lock, your processor will spend n nanoseconds inside the lock acquire+release APIs: this is undesirable overhead, but unavoidable. When two threads are acquiring + releasing a non-scalable read-lock, the processors might spend approximately 5n nanoseconds inside the lock APIs: the overhead goes up superlinearly as the number of processors goes up. In contrast, a scalable lock keeps the overhead at approximately n for any number of processors. Scalability is only meaningful for read-locks; by definition, you can’t really scale an exclusive (aka write) lock. So don’t spend the memory footprint of a scalable lock unless you really expect multiple readers spread across multiple processors.
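The idea behind a scalable read lock can be sketched as per-processor reader counters, each on its own cache line, so that readers on different processors never write to the same memory; the writer pays instead, by checking every slot. This is a hypothetical user-mode illustration with C11 atomics (READER_SLOTS, the 64-byte cache line and all names are assumptions), not the NDIS RW lock’s actual implementation. It also makes the memory cost visible: one cache line per slot, per lock.

```c
#include <assert.h>
#include <stdalign.h>
#include <stdatomic.h>
#include <stdbool.h>

#define READER_SLOTS 8   /* assumption: one slot per processor */

/* Each slot occupies its own (assumed 64-byte) cache line. */
typedef struct {
    alignas(64) atomic_int readers;
} reader_slot;

typedef struct {
    reader_slot slot[READER_SLOTS];
    atomic_bool writer;
} scalable_rwlock;

static void srw_init(scalable_rwlock *l)
{
    for (int i = 0; i < READER_SLOTS; i++)
        atomic_init(&l->slot[i].readers, 0);
    atomic_init(&l->writer, false);
}

/* A reader on processor `cpu` writes only to its own slot in the common case. */
static void srw_read_acquire(scalable_rwlock *l, int cpu)
{
    atomic_int *mine = &l->slot[cpu % READER_SLOTS].readers;
    for (;;) {
        atomic_fetch_add(mine, 1);         /* announce this reader (seq_cst) */
        if (!atomic_load(&l->writer))      /* no writer: read access granted */
            return;
        atomic_fetch_sub(mine, 1);         /* writer present: back off */
        while (atomic_load(&l->writer)) {
            /* spin until the writer is done */
        }
    }
}

static void srw_read_release(scalable_rwlock *l, int cpu)
{
    atomic_fetch_sub(&l->slot[cpu % READER_SLOTS].readers, 1);
}

/* A writer must visit every slot: exclusive access does not scale. */
static void srw_write_acquire(scalable_rwlock *l)
{
    bool expected = false;
    while (!atomic_compare_exchange_weak(&l->writer, &expected, true))
        expected = false;                  /* another writer holds it: retry */
    for (int i = 0; i < READER_SLOTS; i++) {
        while (atomic_load(&l->slot[i].readers) != 0) {
            /* wait for readers already inside to leave */
        }
    }
}

static void srw_write_release(scalable_rwlock *l)
{
    atomic_store(&l->writer, false);
}
```

Because every operation is sequentially consistent, a reader that increments its slot and then sees no writer, and a writer that sets the flag and then scans the slots, cannot both miss each other.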

What do I mean by synchronized at some IRQL? Code running at IRQL x can be preempted by code running at IRQL x+1. This preemption would cause a deadlock with any sort of locking scheme, unless you can get everyone who acquires the lock to agree to always be at a particular IRQL while holding the lock. This is why IRQL is tied up in all this. (The other, less important angle: you can’t sleep if IRQL >= DISPATCH_LEVEL, because of how the scheduler works. So you’ll note that all sleep locks are synchronized below it.) Using a “critical region”, you can hand-wave away the difference between PASSIVE_LEVEL and APC_LEVEL, so some locks will freely hand-wave there. There’s nothing about spinlocks themselves that require them to be synchronized at precisely DISPATCH_LEVEL; that’s just a fiction the NT kernel maintains to simplify coming to agreement on which IRQL to use; KeAcquireInterruptSpinLock is (behind the scenes) an example of a spinlock at a higher IRQL.

@anton_bassov said:

So what I need is a mutex/lock that works in PASSIVE_LEVEL without changing the IRQL. The KSPIN_LOCK changes the IRQL right?

so what would be a good option in this case?

KMUTEX seems to be the right way to go…

Anton Bassov

Seems that KMUTEX is deprecated, sadly.

@“Jeffrey_Tippet_[MSFT]” said:

A whirlwind comparison of locks: […]

Thanks for the extensive explanation. Can I borrow a little more of your time to ask about KeWaitForSingleObject? Let me explain the use case and why I am asking these questions. I have driver-to-driver communication through an interface (IRP_MN_QUERY_INTERFACE), and the other driver calls my driver at PASSIVE_LEVEL. The OS can then send me a PnP notification that the interface is about to go away; this notification also arrives at PASSIVE_LEVEL. Before I can remove the interface, I need to wait for the other driver’s calls to finish, and I am searching for a mechanism to wait. Since I am at PASSIVE_LEVEL, I don’t want to raise IRQL, which is why spin locks are not an option.

I am thinking of using a KEVENT: the functions called by the other driver would call KeSetEvent when they finish, and the removal path would call KeWaitForSingleObject on that event, so the removal proceeds only after those functions have signaled that they are done. Does that make sense?

Thanks in advance.

Jeffrey,

KMUTEX: sleeps, synchronized at APC_LEVEL, deprecated.

Well, judging from the link below, it does not really seem to be deprecated; at least, there is no mention of that anywhere.

https://docs.microsoft.com/en-us/windows-hardware/drivers/kernel/introduction-to-mutex-objects

Furthermore, IIRC, unlike FAST_MUTEX, synchronisation with KMUTEX does not affect IRQL; after all, it is meant to be used with KeWaitXXX(). In fact, it may not be a bad alternative to both fast and guarded mutexes in situations where recursive locking is required.

OTOH, KeWaitForMutexObject() documentation seems to be, indeed, a dead link. Probably, they deprecated only KeWaitForMutexObject() while leaving all other KMUTEX-related functions valid and usable?
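The recursion point can be illustrated in user mode with POSIX mutex types, as an analogy only: PTHREAD_MUTEX_RECURSIVE lets the owning thread acquire the mutex again (as a thread that already owns a KMUTEX may), while PTHREAD_MUTEX_ERRORCHECK is non-recursive and reports EDEADLK where a recursive FAST_MUTEX acquisition would simply deadlock. The helper names make_mutex and lock_twice are hypothetical.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <errno.h>
#include <pthread.h>

/* User-mode analogy only.
 * PTHREAD_MUTEX_RECURSIVE: the owner may re-acquire (like an owned KMUTEX).
 * PTHREAD_MUTEX_ERRORCHECK: non-recursive; reports EDEADLK instead of hanging,
 * where a recursive FAST_MUTEX acquire would deadlock. */
static int make_mutex(pthread_mutex_t *m, int type)
{
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0)
        return rc;
    rc = pthread_mutexattr_settype(&attr, type);
    if (rc == 0)
        rc = pthread_mutex_init(m, &attr);
    pthread_mutexattr_destroy(&attr);
    return rc;
}

/* Locks a fresh mutex of the given type twice from the same thread and returns
 * the result of the second attempt (0 = granted). Cleans up afterwards. */
static int lock_twice(int type)
{
    pthread_mutex_t m;
    if (make_mutex(&m, type) != 0 || pthread_mutex_lock(&m) != 0)
        return -1;                              /* setup failed */
    int second = pthread_mutex_lock(&m);        /* nested acquisition */
    if (second == 0)
        pthread_mutex_unlock(&m);               /* undo the nested acquisition */
    pthread_mutex_unlock(&m);
    pthread_mutex_destroy(&m);
    return second;
}
```

As with a KMUTEX, a recursive acquisition has to be matched by an equal number of releases before another thread can get the lock.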

Anton Bassov

Thank you very much, Mr. Tippet, for taking the time to write such a nice tutorial about locking mechanisms. What I like best about it is that what it gains in concision it does not lose in accuracy. A pretty damn terrific feat, I’d say.

A very good write-up by Mr. Ionescu from The NT Insider can be found here.

A much older, and less comprehensive, article here.

Thanks for the extensive explanation, can I borrow a little more of your time to explain KeWaitForSingleObject ? […] I am thinking of using a KEVENT, like in the functions from the other driver set KeSetEvent so I can use KeWaitForSingleObject from the removal call, so the functions will signal that they finished and then I can proceed to remove. Does it make sense?

Seems reasonable.

Well, judging from the link below, it does not really seem to be deprecated - at least there is no mentioning of it anywhere.

I guess “deprecated” for some weak definition of the word. At this point, a FAST_MUTEX is almost always superior to a KMUTEX. The only advantage I’m aware of for the KMUTEX is that you can shove it into a KeWaitForMultipleObjects call along with a bunch of other stuff.

It’s not deprecated in the sense that you have to go remove it, or will get in trouble for using it. Just, given the choice, in new code, reach for a FAST_MUTEX first.

A very good write-up by Mr. Ionescu from The NT Insider can be found here.

Oh my goodness, how did I not know about that article? It is indeed excellent, and covers some points that I’d overlooked. I should have just referenced that. Although I have to complain that it overlooks our beloved NDIS RW lock, the most scalable spinlock in the kernel.

At this point, a FAST_MUTEX is almost always superior than a KMUTEX

Well, FAST_MUTEX synchronises at APC_LEVEL, but the OP is looking for something that does not change IRQL. Therefore, KMUTEX seems to be the way to go. OTOH, I am not sure whether such a requirement is really justified. Judging from everything the OP has said so far, he does not seem to be that experienced with Windows KM development, so his requirement may well be more of an opinion/perception than a sound, well-founded technical requirement…

Anton Bassov

Hi, thanks all for the help.

Indeed, I am not that experienced in KM development, but I am trying to catch up. The PASSIVE_LEVEL requirement comes from our agreement with the other driver that all driver-to-driver calls between us are kept at PASSIVE_LEVEL, because the other driver’s calls into our code originate from an Escape call.

I ended up using a KEVENT to control the flow, and so far it is working: in the outstanding calls I clear the event (FALSE), do the work, then set it (TRUE). When a change on the OS side happens and I need to remove the communication with the other driver, I wait for the event to be signaled (TRUE) and then continue with the removal. As far as I can tell, KEVENT-related functions like KeClearEvent and KeSetEvent temporarily raise the IRQL but lower it again before the call returns, and the same seems to be true for KMUTEX. Let me know if my assessment is correct.

So, to complete the picture: the reason I cannot raise the IRQL is that the escape call path runs in pageable memory, so if I raise the IRQL I may hit a bugcheck.
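One caveat with a single event that each call clears on entry and sets on exit: if two calls can overlap, the first one to finish sets the event while the second is still running, and the removal path can proceed too early. A common alternative is to count the calls in flight and signal only when the count drops to zero. The kernel already provides facilities built around this idea (remove locks via IoAcquireRemoveLock and IoReleaseRemoveLockAndWait, and rundown protection via ExAcquireRundownProtection and ExWaitForRundownProtectionRelease), which may be worth evaluating. The sketch below is only a user-mode model of the counting pattern, with pthreads and hypothetical names (call_guard, guard_enter, guard_exit, guard_wait_for_drain); it is not one of those APIs.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical drain guard: count the calls currently inside the interface and
 * let the removal path sleep until that count reaches zero. */
typedef struct {
    pthread_mutex_t mtx;
    pthread_cond_t drained;
    int outstanding;     /* calls currently executing */
    bool removing;       /* removal has started: refuse new calls */
} call_guard;

static void guard_init(call_guard *g)
{
    pthread_mutex_init(&g->mtx, NULL);
    pthread_cond_init(&g->drained, NULL);
    g->outstanding = 0;
    g->removing = false;
}

/* Call at the start of each interface routine; false means "going away". */
static bool guard_enter(call_guard *g)
{
    pthread_mutex_lock(&g->mtx);
    bool ok = !g->removing;
    if (ok)
        g->outstanding++;
    pthread_mutex_unlock(&g->mtx);
    return ok;
}

/* Call when a routine whose guard_enter() succeeded has finished. */
static void guard_exit(call_guard *g)
{
    pthread_mutex_lock(&g->mtx);
    if (--g->outstanding == 0 && g->removing)
        pthread_cond_broadcast(&g->drained);
    pthread_mutex_unlock(&g->mtx);
}

/* Removal path: refuse new calls, then sleep until in-flight calls finish. */
static void guard_wait_for_drain(call_guard *g)
{
    pthread_mutex_lock(&g->mtx);
    g->removing = true;
    while (g->outstanding != 0)
        pthread_cond_wait(&g->drained, &g->mtx);
    pthread_mutex_unlock(&g->mtx);
}

/* Demo: removal must stay blocked while one call is in flight. */
static call_guard demo_guard;
static atomic_bool demo_drained;

static void *removal_thread(void *arg)
{
    (void)arg;
    guard_wait_for_drain(&demo_guard);
    atomic_store(&demo_drained, true);
    return NULL;
}

static bool drain_demo(void)
{
    pthread_t t;
    guard_init(&demo_guard);
    atomic_store(&demo_drained, false);
    if (!guard_enter(&demo_guard))          /* one call is now in flight */
        return false;
    pthread_create(&t, NULL, removal_thread, NULL);
    bool was_blocked = !atomic_load(&demo_drained);  /* cannot be done yet */
    guard_exit(&demo_guard);                /* the in-flight call completes */
    pthread_join(t, NULL);
    return was_blocked && atomic_load(&demo_drained) && !guard_enter(&demo_guard);
}
```

In a driver, the same shape would typically be an interlocked counter plus a KEVENT that is set when the count reaches zero, or one of the kernel facilities named above.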

the reason I cannot raise the IRQL is because the escape call is pageable memory,if I raise the IRQL I may hit a bugcheck.

The only thing that APC_LEVEL means is that the code running at APC_LEVEL cannot be invoked recursively. For example, recursively calling code that acquires a FAST_MUTEX will result in a deadlock, because fast mutex acquisition involves an atomic test-and-set and, hence, cannot be done recursively. Another example is when you are on the paging I/O path to the pagefile: there, a page fault cannot be handled without causing another page fault. However, this applies only to the code that appears on the paging I/O path, so accessing pageable memory at APC_LEVEL does not cause any problem in all other cases, including yours. Since FAST_MUTEX synchronises at APC_LEVEL, you can incur as many page faults as you wish while holding it (unless you happen to be on the paging I/O path to the pagefile, of course).

In other words, as I have said already, your “requirement” is nothing more than just an erroneous perception…

Anton Bassov